Excited to release initial insights for our Survey on Production MLOps!! The survey would still benefit from your contribution and it's OPEN FOR RESPONSES 🚀🚀🚀

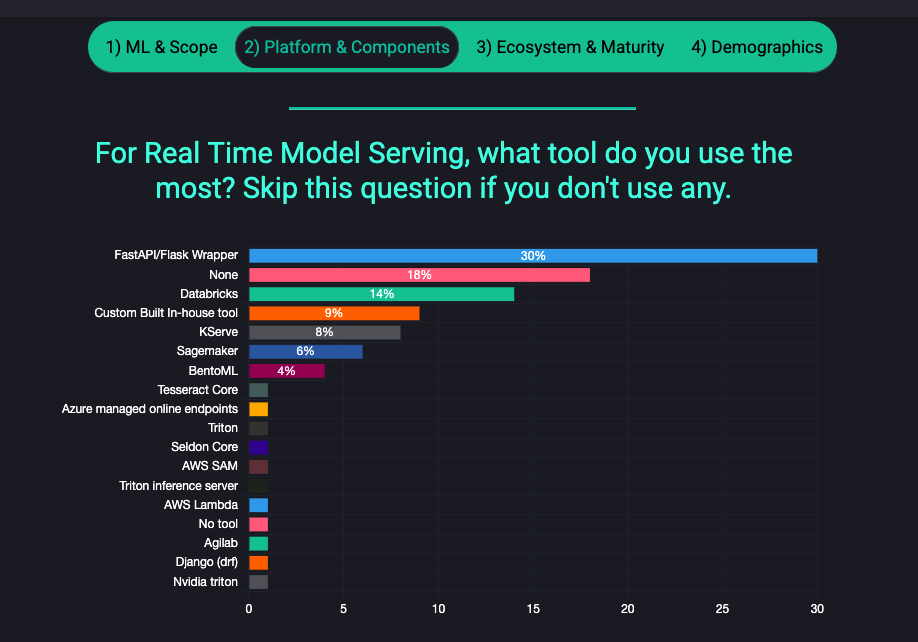

Model Serving is still unstandardised: About 40% of organisations run custom wrappers (vs 56% in 2024) for their ML models! Databricks usage doubled to 14% (+7% YoY), followed by KServe, which increased to 8% (+2% YoY), and then SageMaker, which decreased to 6% (-3% YoY)!

If you have a few minutes, your contribution will make a significant difference to the whole production ML ecosystem 🥳 The results will be shared as open source like last year!! You can add your response directly at: https://forms.gle/KF16EckuxNUKDtDK8 🔥


Tabular Foundation Era Begins

We're in the ImageNet moment for tabular data! A new tabular foundation model has been released, TabPFN 2.5, tackling zero-shot predictions; and the sky is the limit in a world that runs on tabular data (spreadsheets, anyone?):

Prior Labs is a Germany-based AI lab that leads exciting research in foundation models for tabular data (including time series foundation models). This past week they released a new foundation model that comes out ahead across the benchmarks presented (e.g. it matches AutoGluon 1.4's 4-hour ensemble on TabArena-Lite), while showing some scalability potential, including relative to their previous models. It is interesting to see how some of the technical decisions have evolved for training, but especially for inference, as with foundation models a lot of the value beyond accuracy also lies in performance and accessibility. Check it out!


AI-Assisted Engineering Report

For better or for worse, AI-assisted engineering is fast becoming a core capability; DX released their 2025 report with important insights:

Data from 100k+ devs across 400+ orgs shows that AI coding assistants are now ubiquitous (91% adoption), with developers reporting ~3.6 hours/week saved (although this is still qualitative / perceived). Some interesting stats show ~22% of merged code is AI-authored, and daily users ship a median of 2.3 PRs/week (~60% more than non-users); however it is not clear how much of this is confounded, e.g. high performers may also be the ones most likely to adopt these tools. Additionally, impact on quality is uneven across orgs, so we should take these insights with a pinch of salt (as every week we see a contrasting view). However this is certainly an important space to watch, and especially after the acquisition of DX by Atlassian we can expect some further interesting insights to come up (hopefully)!


The State of MLOps 2025 Survey 🔥

We are excited to release initial insights for our Survey on Production MLOps!! Model Serving is still unstandardised in 2025: About 40% of orgs still run custom wrappers (vs 56% in 2024) for their ML models! Databricks usage doubled to 14% (+7% YoY), followed by KServe, which increased to 8% (+2% YoY), and then SageMaker, which decreased to 6% (-3% YoY)!

We still need your support to continue collecting diverse perspectives to map the ecosystem! Please help us with your response, as well as by sharing with your colleagues 🚀🚀🚀 If you have a few minutes, your contribution will make a significant difference to the whole production ML ecosystem 🥳 The results will be shared as open source like last year!! You can add your response directly at: https://forms.gle/KF16EckuxNUKDtDK8 🔥
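To make the year-over-year serving figures above concrete, here is a small sketch that derives the implied 2024 shares and the relative change for each option. It assumes the "YoY" values are percentage-point differences (consistent with Databricks "doubling" to 14% with +7); the function names are purely illustrative.

```python
# Reported 2025 share (%) and YoY change, read here as percentage points.
# Custom wrappers: 40% in 2025 vs 56% in 2024, i.e. a change of -16 points.
SERVING_2025 = {
    "Custom wrappers": (40, -16),
    "Databricks": (14, 7),
    "KServe": (8, 2),
    "SageMaker": (6, -3),
}


def implied_2024_share(share_2025: float, change_pp: float) -> float:
    """Share one year earlier, assuming the change is in percentage points."""
    return share_2025 - change_pp


def relative_change(share_2025: float, change_pp: float) -> float:
    """Change relative to the implied 2024 share (1.0 means it doubled)."""
    return change_pp / implied_2024_share(share_2025, change_pp)


for name, (share, change) in SERVING_2025.items():
    previous = implied_2024_share(share, change)
    print(f"{name}: {previous}% -> {share}% ({relative_change(share, change):+.0%})")
```

Under that reading, a small absolute move such as KServe's +2 points is still a sizeable relative shift on a small base, which is worth keeping in mind when comparing the options.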


PyTorch Agent Framework (+RL)

Agentic systems are becoming ubiquitous, and the PyTorch team enters the race with a new agentic framework, TorchForge, powered by their new distributed actor framework, Monarch; let's dive into some of the features:

PyTorch Monarch introduces a single-controller distributed model that lets one Python script orchestrate large clusters via process/actor meshes, similar to frameworks like Ray. This brings some benefits such as progressive fault handling, a separate control/data plane, distributed tensors, and simplified heterogeneous pipelines like RL post-training. TorchForge is a PyTorch-native reinforcement learning library that lets you write rollout/training logic as async "pseudocode", while it does the heavy lifting of handling coordination, retries, weight sync, and resharding. The biggest open question on my side is how this fits with Ray given the recent announcement of the project joining the PyTorch (Linux) Foundation, as I assume we should expect some interesting synergies!
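To give a feel for what "rollout/training logic as async pseudocode" can look like, here is a toy sketch using only Python's standard `asyncio`. It is not the TorchForge or Monarch API: `ToyPolicy`, `ToyTrainer`, `with_retries` and `rl_loop` are hypothetical stand-ins, and the "weights" are just a version counter.

```python
import asyncio


class ToyPolicy:
    """Hypothetical stand-in for a served policy; `version` mimics its weights."""

    def __init__(self) -> None:
        self.version = 0

    async def generate(self, prompt: str) -> tuple[str, int]:
        await asyncio.sleep(0)  # placeholder for remote inference
        return f"{prompt}-response", self.version


class ToyTrainer:
    """Hypothetical stand-in for a trainer; each step bumps the weight version."""

    def __init__(self) -> None:
        self.version = 0

    async def train_step(self, batch: list) -> int:
        await asyncio.sleep(0)  # placeholder for a distributed update
        self.version += 1
        return self.version


async def with_retries(make_call, attempts: int = 3):
    """Re-run an async call on RuntimeError, re-raising after the last attempt."""
    for attempt in range(attempts):
        try:
            return await make_call()
        except RuntimeError:
            if attempt == attempts - 1:
                raise
            await asyncio.sleep(0)  # a real system would back off here


async def rl_loop(steps: int, batch_size: int = 4) -> int:
    policy, trainer = ToyPolicy(), ToyTrainer()
    for step in range(steps):
        # Rollouts are issued concurrently, each wrapped in a retry.
        rollouts = await asyncio.gather(
            *(
                with_retries(lambda i=i: policy.generate(f"prompt-{step}-{i}"))
                for i in range(batch_size)
            )
        )
        # One training step on the collected batch, then a "weight sync".
        policy.version = await trainer.train_step(list(rollouts))
    return policy.version


if __name__ == "__main__":
    print(asyncio.run(rl_loop(steps=3)))
```

The appeal of this style is that the control flow reads top to bottom in one script, while the parts a framework would take over (placement, retries, syncing weights across processes) sit behind the awaited calls.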


Bench for Semantic Query Engines

Today LLM-powered systems rely heavily on retrieval engines; this new benchmark for semantic query engines provides some interesting baselines that hopefully will help improve agentic systems across the industry:

SemBench is a systems benchmark for semantic query processing engines that run end-to-end, multimodal queries over text, images, and audio. This benchmark is different in that it measures the real production trade-offs of cost (i.e. token fees), latency, and quality rather than just model accuracy. It packages 5 scenarios and 55 queries with ground-truth labels, and reports F1 / relative error / Spearman / ARI alongside execution time and monetary spend. This seems quite handy, as it enables teams that are developing these systems to compare plans and optimizations on an equal footing.
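As a rough illustration of reporting quality alongside cost and latency, the sketch below scores a couple of made-up query runs with set-based F1 and relative error. This is not SemBench's code or data format: `QueryRun`, `summarise` and all values are hypothetical, and Spearman / ARI are left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class QueryRun:
    """One engine's result for one query (all fields are illustrative)."""

    predicted: set[str]  # row ids returned by the engine
    expected: set[str]   # ground-truth row ids
    latency_s: float     # wall-clock execution time in seconds
    cost_usd: float      # token fees spent on the query


def f1_score(predicted: set[str], expected: set[str]) -> float:
    """Harmonic mean of precision and recall over returned row ids."""
    if not predicted and not expected:
        return 1.0
    true_pos = len(predicted & expected)
    if true_pos == 0:
        return 0.0
    precision = true_pos / len(predicted)
    recall = true_pos / len(expected)
    return 2 * precision * recall / (precision + recall)


def relative_error(predicted: float, expected: float) -> float:
    """Relative error for aggregate answers such as counts or averages."""
    if expected == 0:
        return 0.0 if predicted == 0 else float("inf")
    return abs(predicted - expected) / abs(expected)


def summarise(runs: list[QueryRun]) -> dict[str, float]:
    """Report quality next to total latency and spend for one engine."""
    return {
        "mean_f1": sum(f1_score(r.predicted, r.expected) for r in runs) / len(runs),
        "total_latency_s": sum(r.latency_s for r in runs),
        "total_cost_usd": sum(r.cost_usd for r in runs),
    }


if __name__ == "__main__":
    runs = [
        QueryRun({"a", "b", "c"}, {"a", "b", "d"}, latency_s=1.5, cost_usd=0.02),
        QueryRun({"x"}, {"x"}, latency_s=0.5, cost_usd=0.01),
    ]
    print(summarise(runs))
    print(relative_error(predicted=90, expected=100))
```

Keeping the three numbers in one summary is the point: two query plans with the same F1 can differ a lot in spend and latency.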


Upcoming MLOps Events

The MLOps ecosystem continues to grow at breakneck speed, making it ever harder for us as practitioners to stay up to date with relevant developments. A fantastic way to keep on top of relevant resources is through the great community and events that the MLOps and Production ML ecosystem offers. This is the reason why we have started curating a list of upcoming events in the space, which are outlined below.

Upcoming conferences where we're speaking:

Other upcoming MLOps conferences in 2025:

In case you missed our talks:


Check out the fast-growing ecosystem of production ML tools & frameworks at the GitHub repository, which has reached over 10,000 ⭐ GitHub stars. We are currently looking for more libraries to add - if you know of any that are not listed, please let us know or feel free to add a PR. Four featured libraries in the GPU acceleration space are outlined below.

- Kompute - Blazing fast, lightweight and mobile phone-enabled GPU compute framework optimized for advanced data processing use cases.

- CuPy - An implementation of a NumPy-compatible multi-dimensional array on CUDA. CuPy consists of the core multi-dimensional array class, cupy.ndarray, and many functions on it.

- JAX - Composable transformations of Python+NumPy programs: differentiate, vectorize, JIT to GPU/TPU, and more.

- cuDF - Built on the Apache Arrow columnar memory format, cuDF is a GPU DataFrame library for loading, joining, aggregating, filtering, and otherwise manipulating data.

If you know of any open source and open community events that are not listed, do give us a heads up so we can add them!


As AI systems become more prevalent in society, we face bigger and tougher societal challenges. We have seen a large number of resources that aim to tackle these challenges in the form of AI Guidelines, Principles, Ethics Frameworks, etc.; however, there are so many resources that it is hard to navigate them. Because of this we started an Open Source initiative that aims to map the ecosystem to make it simpler to navigate. You can find multiple principles in the repo - some examples include the following:

- MLSecOps Top 10 Vulnerabilities - This is an initiative that aims to further the field of machine learning security by identifying the top 10 most common vulnerabilities in the machine learning lifecycle as well as best practices.

- AI & Machine Learning 8 principles for Responsible ML - The Institute for Ethical AI & Machine Learning has put together 8 principles for responsible machine learning that are to be adopted by individuals and delivery teams designing, building and operating machine learning systems.

- An Evaluation of Guidelines - The Ethics of Ethics; a research paper that analyses multiple ethics principles.

- ACM's Code of Ethics and Professional Conduct - This is the code of ethics that was put together in 1992 by the Association for Computing Machinery and updated in 2018.

If you know of any guidelines that are not in the "Awesome AI Guidelines" list, please do give us a heads up or feel free to add a pull request!


The Institute for Ethical AI & Machine Learning is a European research centre that carries out world-class research into responsible machine learning.


You received this email because you are registered with The Institute for Ethical AI & Machine Learning's newsletter "The Machine Learning Engineer".


© 2023 The Institute for Ethical AI & Machine Learning
