Excited to release initial insights from our Survey on Production MLOps!! The survey would still benefit from your contribution and it's OPEN FOR RESPONSES 🚀🚀🚀

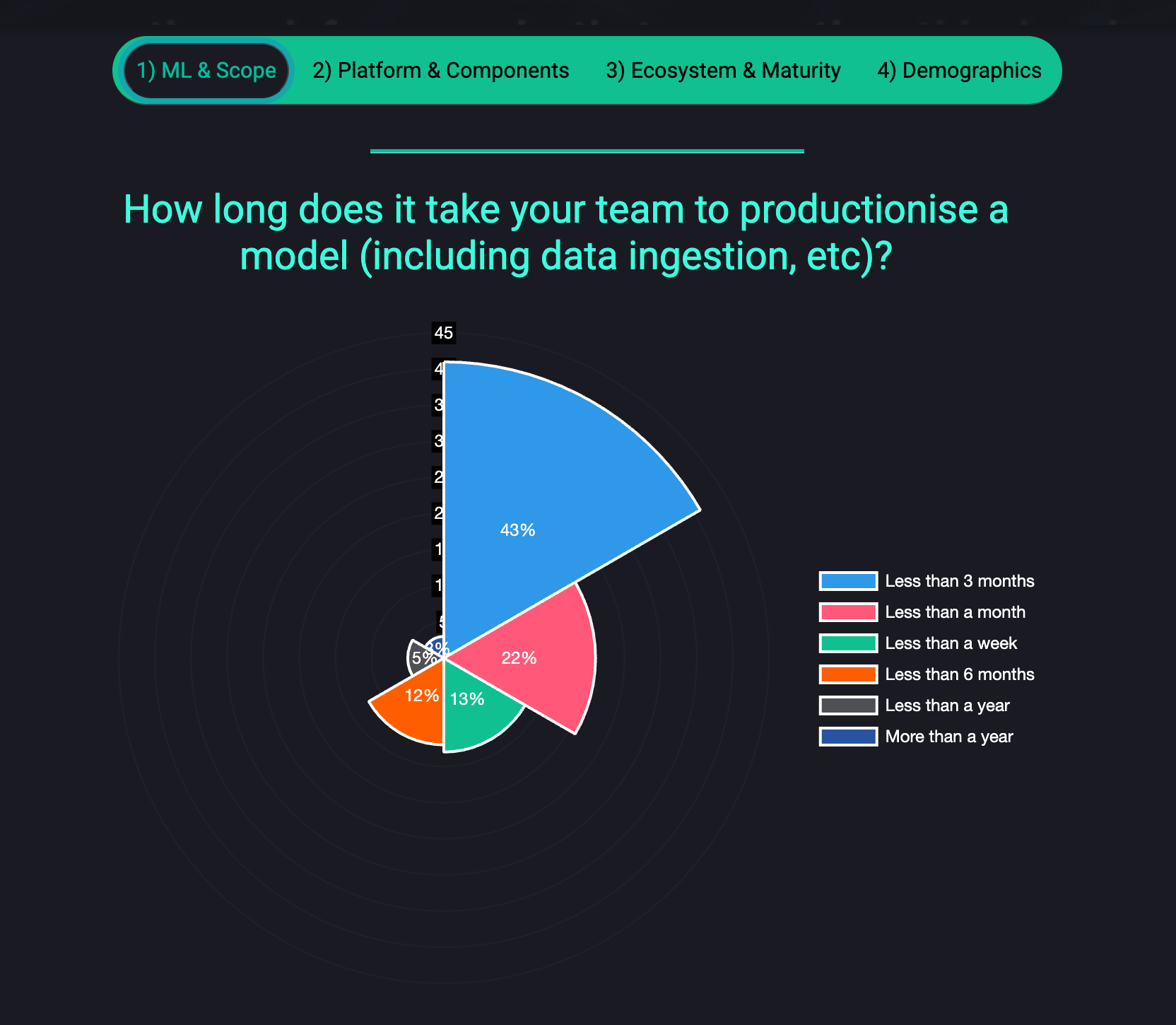

In 2025 about 43% of organisations take between 1-3 months to productionise a machine learning model (vs 28% in 2024) and only 12% take between 3-6 months (vs 25% in 2024), which is great news for the industry! Similarly, 13% are now productionising ML models in less than one week (vs 10% in 2024). This is certainly a healthy trend that we hope continues; however, with the rise of emerging tech like LLMs we do expect to see oscillations! This is one of the most important muscles for organisations to strengthen, as it directly relates to their capability to scale AI innovation.

If you have a few minutes, your contribution will make a significant difference to the whole production ML ecosystem 🥳 The results will be shared as open source like last year!! You can add your response directly at: https://forms.gle/KF16EckuxNUKDtDK8 🔥

Black Forest Labs Launches FLUX-2

Some of you may remember the release of the FLUX model from Black Forest Labs last year, which took image generation to a whole new level; this week they released version 2.0 with some impressive improvements. This new latent flow-based image generation model is focused on production creative workflows, with what they refer to as multi-reference editing, which supports up to 10 reference images in a single architecture. The approach pairs a Mistral-3 24B vision-language model (VLM) with a transformer and a VAE designed around the compression trade-off, which is what enables higher photorealism, spatial coherence and robust typography (e.g. infographics, UI mocks and fine text are no longer garbled). It is quite surprising to see that the improvement of image models is not slowing down; if anything it is accelerating, and it is enabling real-world applications across all industries and domains.

Major Supply Chain Attacks

Last week we saw a major reminder for us ML practitioners about software supply chain attacks: this one infected ~500 packages, including major ones from Zapier, ENS, AsyncAPI, PostHog, Postman and others, amounting to 100M+ monthly downloads. The attack worked as follows: once a project depending on one of these packages is installed or run on your dev machine or CI runner, it uses TruffleHog to harvest secrets (API keys, cloud credentials, GitHub/npm tokens, etc.) and pushes them to public GitHub repos labelled "Sha1-Hulud: The Second Coming". The malicious code then replicates itself by attempting to publish new infected npm packages, and finally wipes the user's home directory if it can't authenticate. For us ML practitioners, this is our weekly reminder of the importance of security in areas where it is not yet mature, e.g. model registries and artifacts, data/feature store credentials, cloud keys, and the CI/CD access used to build training and inference images. These stories are becoming scarier by the minute as renowned packages now fall victim to these attacks; this is likely to become a call to action for the design of package managers themselves, but most importantly a reminder to follow standard security hygiene wherever possible.
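As one small piece of that hygiene, here is a minimal sketch in Python that checks an npm lockfile against a denylist of compromised name/version pairs. It assumes the v2/v3 `package-lock.json` layout, where installed packages are listed under a `packages` key with paths like `node_modules/<name>`. The `find_compromised` and `scan_lockfile` helpers and the denylist entries are hypothetical; in practice the list would come from the published advisory for the specific incident.

```python
from __future__ import annotations

import json
from pathlib import Path


def find_compromised(lockfile: dict, denylist: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Return sorted (name, version) pairs from a v2/v3 lockfile that appear in the denylist."""
    hits = []
    for path, meta in lockfile.get("packages", {}).items():
        if not path:  # the "" key is the root project itself, not a dependency
            continue
        # Nested deps look like "node_modules/a/node_modules/b"; the package name follows
        # the last "node_modules/". An explicit "name" field (used for aliases) wins if present.
        name = meta.get("name") or path.rsplit("node_modules/", 1)[-1]
        version = meta.get("version", "")
        if version in denylist.get(name, set()):
            hits.append((name, version))
    return sorted(hits)


def scan_lockfile(path: str, denylist: dict[str, set[str]]) -> list[tuple[str, str]]:
    """Load a package-lock.json from disk and scan it (hypothetical convenience wrapper)."""
    return find_compromised(json.loads(Path(path).read_text()), denylist)
```

Running something like this in CI before `npm install` is a cheap extra gate, although it only catches versions that are already known to be bad; it does not replace lockfile pinning, disabling install scripts where possible, or rotating credentials after an incident.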

The State of MLOps 2025 Survey 🔥

We still need your support to continue collecting diverse perspectives to map the ecosystem! Please help us by adding your response, as well as by sharing the survey with your colleagues 🚀🚀🚀

Raschka on Building an LLM

Educational material from Sebastian Raschka and Andrej Karpathy is becoming a cornerstone of foundational ML knowledge, and Raschka's recent course "Building an LLM (From Scratch)" is fast becoming a must-watch classic. The course walks ML practitioners through implementing a GPT-style model end-to-end in plain PyTorch, starting from text preprocessing and tokenization, moving through hand-built attention and Transformer blocks, and arriving at a full GPT architecture with training and generation, which is great for building intuition. Along the way you implement next-token pretraining on unlabeled text, and then repurpose the pretrained backbone for supervised tasks such as text classification and simple chat-style behaviour. There are many great takeaways, but learning by building something from scratch is often one of the best ways to develop the foundations before moving on to higher-level or advanced topics (e.g. agentic frameworks, transfer learning).
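To give a flavour of the "hand-built attention" step, here is a minimal single-head causal self-attention sketch. It is written in NumPy rather than the PyTorch used in the course, to keep it dependency-light. The random weight matrices and the small dimensions are illustrative assumptions, not values taken from the course.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention with a causal mask.

    x: (seq_len, d_model); w_q, w_k, w_v: (d_model, d_head).
    Returns the attended values (seq_len, d_head) and the attention weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (seq_len, seq_len) similarity scores
    seq_len = x.shape[0]
    # Mask every position above the diagonal so token i only attends to tokens 0..i.
    mask = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(mask, -np.inf, scores)
    weights = softmax(scores, axis=-1)  # masked entries become exactly 0
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_head = 4, 8, 8
x = rng.normal(size=(seq_len, d_model))  # stand-in for token embeddings
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))
out, attn = causal_self_attention(x, w_q, w_k, w_v)
print(out.shape)  # (4, 8)
```

The causal mask is what makes this block usable for next-token pretraining. Each position can only see itself and earlier tokens, so the model never peeks at the token it is being asked to predict.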

2025 Advent of Code

In production ML we are reminded that AI systems can only be as robust as the engineering discipline behind them, and what better way to polish your foundations than with Advent of Code 2025? It's great to see that despite the LLM craze, Advent of Code is back! In previous years the organisers had to fight an influx of LLM-generated submissions on the leaderboards, which is why there is no global leaderboard this year, but you can still practice. The puzzles unlock TONIGHT at midnight US Eastern, so do keep an eye out and try them! The only caveat is that this time around there will be 12 days of puzzles instead of 25, but this should still keep us busy for the upcoming month! On this topic, funnily enough, I ended up attempting to build a custom lexer, parser and interpreter for a custom language that I also wanted to explore testing with these puzzles; it's still a work in progress, but it's pretty mind-blowing how these tools are evolving to enable this kind of project.
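For anyone curious about the lexer, parser and interpreter pipeline mentioned above, here is a minimal sketch of the three stages in Python. It targets a hypothetical toy language (integer arithmetic with `+ - * /`, unary minus and parentheses). The grammar and every function name are illustrative assumptions and have nothing to do with the custom language described here. The parser is a standard recursive-descent parser that produces a nested-tuple AST.

```python
from __future__ import annotations

# Hypothetical toy language. Grammar (one method per rule in the parser):
#   expr   := term (("+" | "-") term)*
#   term   := factor (("*" | "/") factor)*
#   factor := NUMBER | "(" expr ")" | "-" factor
DIGITS = "0123456789"


def lex(src: str) -> list[str]:
    """Lexer: turn source text into a flat list of string tokens."""
    tokens, i = [], 0
    while i < len(src):
        ch = src[i]
        if ch.isspace():
            i += 1
        elif ch in DIGITS:
            j = i
            while j < len(src) and src[j] in DIGITS:
                j += 1
            tokens.append(src[i:j])  # multi-digit integer literal
            i = j
        elif ch in "+-*/()":
            tokens.append(ch)
            i += 1
        else:
            raise SyntaxError(f"unexpected character {ch!r} at position {i}")
    return tokens


class Parser:
    """Parser: build a nested-tuple AST such as ("+", ("num", 1), ("num", 2))."""

    def __init__(self, tokens: list[str]):
        self.tokens, self.pos = tokens, 0

    def peek(self) -> str | None:
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def take(self, expected: str | None = None) -> str:
        tok = self.peek()
        if tok is None or (expected is not None and tok != expected):
            raise SyntaxError(f"expected {expected!r}, got {tok!r}")
        self.pos += 1
        return tok

    def expr(self):
        node = self.term()
        while self.peek() in ("+", "-"):
            node = (self.take(), node, self.term())  # left-associative binary node
        return node

    def term(self):
        node = self.factor()
        while self.peek() in ("*", "/"):
            node = (self.take(), node, self.factor())
        return node

    def factor(self):
        tok = self.peek()
        if tok == "(":
            self.take("(")
            node = self.expr()
            self.take(")")
            return node
        if tok == "-":
            self.take("-")
            return ("neg", self.factor())
        if tok is not None and tok.isdigit():
            self.take()
            return ("num", int(tok))
        raise SyntaxError(f"unexpected token {tok!r}")


def evaluate(node) -> int:
    """Interpreter: walk the AST recursively and compute its value."""
    if node[0] == "num":
        return node[1]
    if node[0] == "neg":
        return -evaluate(node[1])
    op, left, right = node
    a, b = evaluate(left), evaluate(right)
    if op == "+":
        return a + b
    if op == "-":
        return a - b
    if op == "*":
        return a * b
    return a // b  # "/" uses floor division so results stay integers


def run(src: str) -> int:
    parser = Parser(lex(src))
    tree = parser.expr()
    if parser.peek() is not None:
        raise SyntaxError(f"unexpected trailing token {parser.peek()!r}")
    return evaluate(tree)
```

For example, `run("2 + 3 * (4 - 1)")` evaluates to `11`. Each grammar rule maps to one method, and that is what makes recursive descent a popular first choice for small custom languages.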

Upcoming MLOps Events

The MLOps ecosystem continues to grow at breakneck speed, making it ever harder for us as practitioners to stay up to date with relevant developments. A fantastic way to keep on top of relevant resources is through the great community and events that the MLOps and Production ML ecosystem offers. This is why we have started curating a list of upcoming events in the space, which are outlined below.

Upcoming conferences where we're speaking:

Other upcoming MLOps conferences in 2025:

In case you missed our talks:

Check out the fast-growing ecosystem of production ML tools & frameworks at the GitHub repository, which has reached over 10,000 ⭐ GitHub stars. We are currently looking for more libraries to add; if you know of any that are not listed, please let us know or feel free to add a PR. Four featured libraries in the GPU acceleration space are outlined below.

- Kompute - Blazing fast, lightweight and mobile phone-enabled GPU compute framework optimized for advanced data processing use cases.

- CuPy - An implementation of a NumPy-compatible multi-dimensional array on CUDA. CuPy consists of the core multi-dimensional array class, cupy.ndarray, and many functions on it.

- JAX - Composable transformations of Python+NumPy programs: differentiate, vectorize, JIT to GPU/TPU, and more.

- cuDF - Built on the Apache Arrow columnar memory format, cuDF is a GPU DataFrame library for loading, joining, aggregating, filtering, and otherwise manipulating data.

If you know of any open source and open community events that are not listed, do give us a heads up so we can add them!
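Because CuPy mirrors the NumPy API, the same array code can often run on a GPU or a CPU without changes. Below is a minimal sketch of that pattern. It assumes CuPy may or may not be installed, picks CuPy only when a CUDA device is actually available, and otherwise falls back to NumPy. `standardize` is a hypothetical helper used purely for illustration.

```python
import numpy as np

try:
    import cupy as cp

    if cp.cuda.runtime.getDeviceCount() < 1:  # CuPy installed but no usable GPU
        raise RuntimeError("no CUDA device available")
    xp = cp
except Exception:
    xp = np  # fall back to NumPy on CPU-only machines


def standardize(data):
    """Column-wise z-score, written once against the shared NumPy/CuPy array API."""
    arr = xp.asarray(data, dtype=xp.float32)
    return (arr - arr.mean(axis=0)) / arr.std(axis=0)


result = standardize([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
# If the computation ran on the GPU, copy the result back to host (NumPy) memory.
host = cp.asnumpy(result) if xp is not np else result
print(host)
```

Keeping the module choice in a single `xp` variable localises the GPU/CPU decision in one place. Data movement between host and device stays explicit, and that is usually where the performance pitfalls are.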

|

|---|

| | |

As AI systems become more prevalent in society, we face bigger and tougher societal challenges. We have seen a large number of resources that aim to tackle these challenges in the form of AI guidelines, principles, ethics frameworks, etc.; however, there are so many resources that they are hard to navigate. Because of this we started an open source initiative that aims to map the ecosystem to make it simpler to navigate. You can find multiple principles in the repo; some examples include the following:

- MLSecOps Top 10 Vulnerabilities - This is an initiative that aims to further the field of machine learning security by identifying the top 10 most common vulnerabilities in the machine learning lifecycle as well as best practices.

- AI & Machine Learning 8 principles for Responsible ML - The Institute for Ethical AI & Machine Learning has put together 8 principles for responsible machine learning that are to be adopted by individuals and delivery teams designing, building and operating machine learning systems.

- An Evaluation of Guidelines - The Ethics of Ethics: a research paper that analyses multiple ethics principles.

- ACM's Code of Ethics and Professional Conduct - This is the code of ethics that was put together in 1992 by the Association for Computing Machinery and updated in 2018.

If you know of any guidelines that are not in the "Awesome AI Guidelines" list, please do give us a heads up or feel free to add a pull request!

The Institute for Ethical AI & Machine Learning is a European research centre that carries out world-class research into responsible machine learning.

You received this email because you are registered with The Institute for Ethical AI & Machine Learning's newsletter "The Machine Learning Engineer".

© 2023 The Institute for Ethical AI & Machine Learning

The Institute for Ethical AI & Machine Learning
