AI-RFX Procurement Framework v1.0

Machine Learning Maturity Model, AI & Machine Learning Solutions

0 - Introduction

0.1 - Overview

This “Machine Learning Maturity Model v1.0” is part of the AI-RFX Procurement Framework and forms the core of all its templates, including the “AI Request for Proposal Template” and the “AI Tender Competition Template”.

The Machine Learning Maturity Model is an extension of The Principles for Responsible Machine Learning, and aims to convert the high-level Responsible ML Principles into practical, checklist-style assessment criteria. This “checklist” goes beyond the machine learning algorithms themselves, providing criteria to evaluate the maturity of the infrastructure and processes around the algorithms. “Maturity” is not defined solely in terms of technical excellence, scientific rigor and robust products. It also involves responsible innovation and development processes, with sensitivity to the relevant domains of expert knowledge and consideration of all relevant direct and indirect stakeholders.

The Machine Learning Maturity Model should form only a subset of the overall assessment criteria required to evaluate a proposed solution, as it is specific to the machine learning component. It should be complemented by a traditional assessment of other areas, such as the specific features requested, the services needed and more domain-specific requirements.

Each criterion is linked to one of the Principles for Responsible Machine Learning, as follows:

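| # | Assessment Criteria | Responsible ML Principle |
|---|---|---|
| #1 | Practical benchmarks | #6 - Practical accuracy |
| #2 | Explainability by justification | #3 - Explainability by justification |
| #3 | Data and model assessment processes | #2 - Bias evaluation |
| #4 | Infrastructure for reproducible operations | #4 - Reproducible operations |
| #5 | Privacy enforcing infrastructure | #7 - Trust beyond the user |
| #6 | Operational process design | #1 - Human augmentation |
| #7 | Change management capabilities | #5 - Displacement strategy |
| #8 | Security risk processes | #8 - Data risk awareness |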

0.2 - About Us

The Institute for Ethical AI & Machine Learning is a Europe-based research centre that carries out world-class research into responsible machine learning systems. We are formed by cross-functional teams of applied STEM researchers, philosophers, industry experts, data scientists and software engineers.

Our vision is to mitigate risks of AI and unlock its full potential through frameworks that ensure ethical and conscientious development of intelligent systems across industrial sectors. We are building the Bell Labs of the 21st Century by delivering breakthrough contributions through applied AI research. You can find more information about us at https://ethical.institute.

0.3 - Motivation

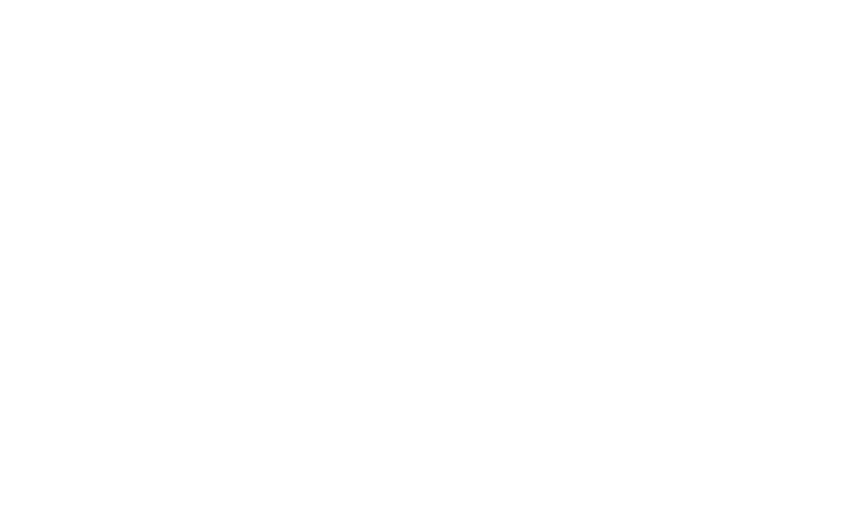

A growing number of companies are working towards introducing machine learning systems to automate critical processes at scale. This has required the “productisation” of machine learning models, which introduces new complexities. These complexities revolve around a new set of roles that fall under the umbrella of “Machine Learning Engineering”, at the intersection of DevOps, data science and software engineering.

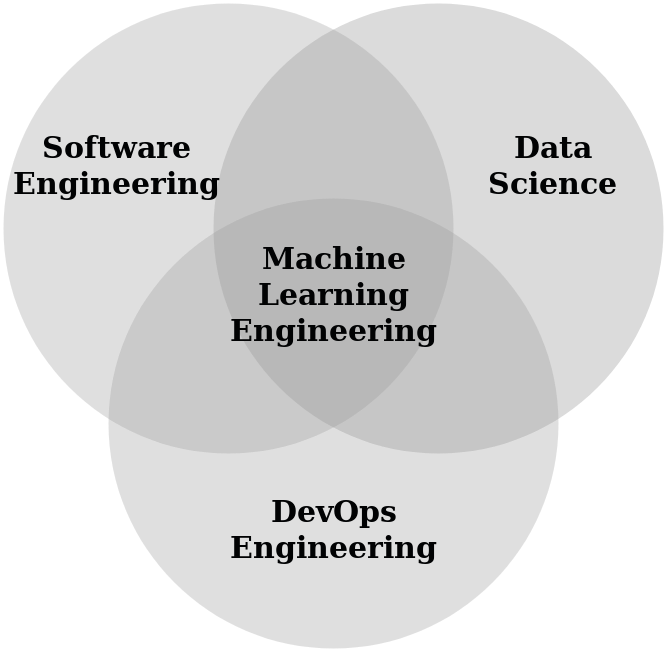

To make things harder, deploying machine learning solutions in industry introduces even greater complexity, as it brings these new “Machine Learning Engineering” roles together with industry domain experts and policy makers.

Because of this, there is a strong need for AI & ML standards that empower practitioners to raise the bar for safety, quality and performance in AI solutions. The AI-RFX Procurement Framework aims to take the first steps towards this.

0.4 - How to use this document

0.4.1 - Using as reference

Many procurement managers may already have internally approved assessment criteria. If that is the case, this document can be used as a reference for insights on key areas to take into consideration when procuring and evaluating an AI / Machine Learning solution.

0.4.2 - Structure

Each subsection below starts with a detailed explanation of the criterion, followed by a summary of the requirements expected from suppliers. Finally, it contains a set of detailed questions that the supplier is expected to answer, explicitly or implicitly, in their proposal, together with red flags to look out for in each question.

0.4.3 - Example

The Machine Learning Maturity Model was used to build the “AI Request for Proposal Template” and the “AI Tender Competition Template”, which are part of the AI-RFX Procurement Framework.

0.4.4 - When to use

This template is relevant only for the procurement of machine learning systems. It is therefore only suitable when looking to automate a process involving data analysis that is too complex to be tackled with simple RPA (robotic process automation) tools or rule-based systems.

0.5 - Template vs Reality

This document should serve as a guide and does not require everything to be completed exactly as stated. Especially for smaller projects, the level of detail required may vary significantly, and some sections can be left out as needed. This template attempts to provide a high-level overview of each chapter (and its sections) so that the procurement manager and suppliers can provide as much content as is reasonable.

0.6 - Open Source License - Free as in freedom

0.6.1 - Open source License

This document is open source, which means that it can be updated by the community. It is released as open source so that the community can continuously improve it. This will help ensure that the standards for safety, quality and performance expected of machine learning systems keep rising, whilst both suppliers and companies keep them at a realistic level.

0.6.2 - Contributing.md

The Institute for Ethical AI & Machine Learning’s AI-RFX committee is in charge of the contributing community for all of the templates under the AI-RFX Procurement Framework. If you would like to contribute, add suggestions, or share examples and practical uses of this template, please contact us through the website or send us an email at a@ethical.institute.

0.6.3 - License

This document is released under the MIT License, which means that anyone can re-use, modify or enhance it as long as credit is given to The Institute for Ethical AI & Machine Learning. The license also includes an “as is” disclaimer. Please read the license before using this template.

Machine Learning Maturity Model

1 - Practical benchmarks

This Machine Learning Maturity Model assessment criterion is directly aligned with the Responsible Machine Learning Principle #6 - Practical accuracy.

Explanation

- Having the right benchmark metrics is one of the most important points to consider when evaluating machine learning solutions. The benchmarks considered in this section include accuracy, time, time-to-accuracy and computational resources.
- What makes a good benchmark can vary significantly depending on task complexity, dataset size, etc. However, the objective of this criterion is to assess whether suppliers follow best practices in data science, and whether these are aligned with the use-case requirements.

Requirements

- Suppliers must be able to demonstrate best practices in software development, data science and industry-specific knowledge when presenting benchmarks. These benchmarks include:
  - Time - the supplier must provide estimated processing times.
  - Accuracy - the supplier must provide relevant metrics beyond accuracy.
  - Time-to-accuracy - the supplier must provide estimates of the time and resources it takes to train new models to a reasonable accuracy.
  - Computational resources - the supplier must provide insight into the computational resources required for efficient use of their system.
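To illustrate why the accuracy requirement asks for metrics beyond accuracy, the following minimal Python sketch computes precision, recall and F1 for a binary classifier from its predictions. The data is hypothetical: on an imbalanced dataset where only 1 case in 10 is positive, a model that always predicts the majority class reaches 90% accuracy while detecting none of the positive cases.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BinaryMetrics:
    accuracy: float
    precision: float
    recall: float
    f1: float


def binary_metrics(y_true: List[int], y_pred: List[int]) -> BinaryMetrics:
    """Compute accuracy, precision, recall and F1 for labels in {0, 1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    accuracy = (tp + tn) / len(y_true)
    # Treat precision/recall as 0.0 when their denominator is empty.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return BinaryMetrics(accuracy, precision, recall, f1)


if __name__ == "__main__":
    # Hypothetical imbalanced data: 1 positive case in 10.
    y_true = [1] + [0] * 9
    always_negative = [0] * 10
    print(binary_metrics(y_true, always_negative))
    # BinaryMetrics(accuracy=0.9, precision=0.0, recall=0.0, f1=0.0)
```

Which metrics are "relevant" is use-case specific; the point of the sketch is only that a single headline accuracy figure can hide a model that is useless for the cases that matter.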

| # | Question | Red flags |
|---|---|---|
| 1.1 | Does the supplier have a process and/or infrastructure to make available statistical metrics beyond accuracy? | |
| 1.2 | Does the supplier have a process to ensure their machine learning evaluation metrics (i.e. cost functions & benchmarks) are aligned with the objective of the use-case? | |
| 1.3 | Does the supplier have a process to validate the way they evaluate predictions as correct or incorrect? | |
| 1.4 | Does the supplier use reasonable statistical methods when comparing the performance of different models? | |
| 1.5 | Does the supplier provide comprehensible information on the time performance of their solution? | |
| 1.6 | Does the supplier provide comprehensible estimates on the time and resources required to develop a model from scratch to a reasonable accuracy? | |
| 1.7 | Does the supplier provide minimum and recommended system requirements? | |
| 1.8 | Does the supplier provide comprehensible documentation around their benchmarks? | |
| 1.9 | Does the supplier ensure the staff involved in the benchmarking processes have the right experience? | |
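Question 1.4 asks whether suppliers use reasonable statistical methods when comparing models. One common choice for two classifiers evaluated on the same test set (named here as an example, not as a method required by this framework) is McNemar's test, which looks only at the cases where the two models disagree. The sketch below computes the exact two-sided version from the binomial distribution; the predictions in the example are hypothetical.

```python
from math import comb


def mcnemar_exact(y_true, pred_a, pred_b) -> float:
    """Exact two-sided McNemar p-value for two classifiers scored on the same test set."""
    # b: cases model A got right and model B got wrong; c: the reverse.
    b = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a == t and p != t)
    c = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a != t and p == t)
    n = b + c
    if n == 0:
        return 1.0  # the models never disagree, so there is no evidence of a difference
    # Under the null hypothesis of equal error rates, b ~ Binomial(n, 0.5).
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


if __name__ == "__main__":
    # Hypothetical test set where the models disagree on 10 cases:
    # A is right on 1 of them, B on the other 9.
    y_true = [1] * 10
    pred_a = [1] + [0] * 9
    pred_b = [0] + [1] * 9
    print(round(mcnemar_exact(y_true, pred_a, pred_b), 4))  # prints 0.0215
```

Because the test only uses the disagreements between the two models, it suits paired comparisons on a shared test set; other designs (e.g. repeated cross-validation) call for other tests.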

2 - Explainability by justification

This Machine Learning Maturity Model assessment criterion is directly aligned with the Responsible Machine Learning Principle #3 - Explainability by justification.

Explanation

- When domain experts are asked how they came to a specific conclusion, they don’t answer by pointing to the neurons that fired in their brains. Instead, they provide a “justifiable” explanation of how they reached that conclusion.
- Similarly, with a machine learning model the objective is not to demand an explanation for every single weight in the algorithm. Instead, we look for a justifiable level of reasoning about the end-to-end process around and within the algorithm.
- The level of scrutiny required for an explanation to be “justifiable” will vary depending on how critical the use-case is, as well as the level of feedback that can be analysed by humans.

Requirements

- This criterion depends heavily on Criterion 1 - Practical benchmarks, as suppliers need the right processes and capabilities around their accuracy metrics.
- Suppliers must make a reasonable case that their solution (or solution + human) can provide at least the same level of justification as a domain expert would when making a final decision on an instance of data analysis.
- To propose at least the same level of justification, suppliers must also provide the current level of justification as a quantitative benchmark.

| # | Question | Red flags |
|---|---|---|
| 2.1 | Does the supplier provide audit trails to assess the data that went through the models? | |
| 2.2 | Does the supplier have a process and/or infrastructure to explain input/feature importance? | |
| 2.3 | Does the supplier provide capabilities to explain how input/features affect results? | |
| 2.4 | Does the supplier have the process and/or infrastructure to use model explainability techniques when developing deep learning / more complex models? | |
| 2.5 | Does the supplier have a process and/or infrastructure to work with domain experts to abstract their knowledge into models? | |
| 2.6 | Does the supplier provide comprehensible information around their explainability processes? | |
| 2.7 | Does the supplier ensure the staff involved in the explainability processes have the right experience? | |
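Questions 2.2 and 2.3 concern explaining input/feature importance. Permutation importance is one model-agnostic technique for this, chosen here as an illustration (the framework does not prescribe a method): shuffle one feature column at a time and measure how much a chosen metric drops. The sketch below works with any `predict` callable; the toy model and data are hypothetical.

```python
import random
from typing import Callable, List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(
    predict: Callable[[List[float]], int],
    X: List[List[float]],
    y: List[int],
    n_repeats: int = 5,
    seed: int = 0,
) -> List[float]:
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(y, [predict(row) for row in X])
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row with feature j replaced by its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(y, [predict(row) for row in X_perm]))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    # Hypothetical toy model that only looks at feature 0.
    def toy_model(row: List[float]) -> int:
        return int(row[0] > 0.5)

    data_rng = random.Random(1)
    X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
    y = [toy_model(row) for row in X]
    print(permutation_importance(toy_model, X, y))  # feature 1 scores 0.0
```

A feature the model ignores scores zero, while shuffling a feature it relies on lowers accuracy; this gives a justification at the level of inputs rather than individual weights.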

3 - Data and model assessment processes

This Machine Learning Maturity Model assessment criterion is directly aligned with the Responsible Machine Learning Principle #2 - Bias evaluation.

Explanation

- Any non-trivial decision (defined as one with more than one option) carries an inherent bias, without exception.
- Hence the objective is not to remove bias from a machine learning model completely. Instead, the objective is to ensure that the "desired bias" is aligned with our accuracy/objectives, and that "undesired bias" is identified and mitigated.
- To be more specific, bias in machine learning boils down to the error between development and production. As a result, all machine learning models start to “degrade” as soon as they are put in production. The reasons for this include:
  - Unseen data is not representative of the data used in development.
  - Temporal data changes as time goes on (e.g. inflation affects prices).
  - Human-generated data changes as people and projects change.
- Bias in machine learning is a challenge that can be tackled by ensuring there are processes in place to identify, document and mitigate it.
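The degradation described above can be monitored by comparing the distribution of a feature in production against the development data. The sketch below uses the Population Stability Index (PSI), one common drift statistic chosen here for illustration. The bin edges, the `eps` smoothing constant and the often-quoted 0.2 alert threshold are assumptions that would need to be agreed per use-case.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Share of values in each bin; `edges` are the sorted inner bin boundaries."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # Floor empty bins at eps so the log below stays finite.
    return [max(c / len(values), eps) for c in counts]


def psi(reference: Sequence[float], production: Sequence[float], edges: Sequence[float]) -> float:
    """Population Stability Index between development-time and production samples."""
    ref = bin_proportions(reference, edges)
    prod = bin_proportions(production, edges)
    return sum((p - r) * math.log(p / r) for r, p in zip(ref, prod))


if __name__ == "__main__":
    # Hypothetical feature values seen during development, binned into quartiles.
    reference = [i / 100 for i in range(100)]
    edges = [0.25, 0.5, 0.75]
    drifted = [v + 0.3 for v in reference]  # e.g. prices rising with inflation
    print(psi(reference, reference, edges))  # 0.0: no shift
    print(psi(reference, drifted, edges) > 0.2)  # True under the assumed threshold
```

Each term of the sum is non-negative, so PSI is zero only when the binned distributions match; a drift statistic like this flags that data has moved, but deciding whether the shift introduces undesired bias still needs domain review.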

Requirements

- This assessment criterion depends heavily on Criterion 1 - Practical benchmarks and Criterion 2 - Explainability by justification being in place.
- Suppliers must be able to demonstrate the processes and infrastructure they have to identify undesired bias, through best practices in data science as well as awareness of domain-specific considerations.

The Institute for Ethical AI & Machine Learning is working with the IEEE P7003 working group to develop the P7003 Algorithmic Bias Considerations standard. Once released, this standard will facilitate this assessment criterion, as suppliers that obtain the certification will have verified that they have the relevant data and model assessment processes.

| # | Question | Red flags |
|---|---|---|
| 3.1 | Does the supplier have a process to assess the representativeness of datasets? | |
| 3.2 | Does the supplier have a process to identify and document undesired biases during the development of their models? | |
| 3.3 | Does the supplier have capabilities to track performance metrics in production to identify and mitigate new bias? | |
| 3.4 | Does the supplier provide comprehensible information around their data and model evaluation processes? | |
| 3.5 | Does the supplier demonstrate the team they have allocated has the right expertise to perform the data and model assessment efficiently? | |
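Questions 3.2 and 3.3 involve identifying undesired bias during development and tracking it in production. A simple starting point, chosen here as one of many possible fairness checks, is to compute the same metric separately for each subgroup and report the largest gap. The group labels and data below are hypothetical, and which groups and metrics matter is a domain-specific decision.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def per_group_accuracy(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[Hashable]
) -> Dict[Hashable, float]:
    """Accuracy computed separately for each subgroup label."""
    correct: Dict[Hashable, int] = defaultdict(int)
    total: Dict[Hashable, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


def max_gap(metric_by_group: Dict[Hashable, float]) -> float:
    """Largest difference in the metric between any two subgroups."""
    values = list(metric_by_group.values())
    return max(values) - min(values)


if __name__ == "__main__":
    # Hypothetical predictions for two subgroups, "a" and "b".
    y_true = [1, 0, 1, 0, 1, 0]
    y_pred = [1, 0, 1, 1, 0, 0]
    groups = ["a", "a", "a", "b", "b", "b"]
    by_group = per_group_accuracy(y_true, y_pred, groups)
    print(by_group, max_gap(by_group))
```

The same functions can be run on production data as labels arrive, so that a widening gap becomes part of the metrics tracked in production.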

4 - Infrastructure for reproducible operations

This Machine Learning Maturity Model assessment criterion is directly aligned with the Responsible Machine Learning Principle #4 - Reproducible operations.

Explanation

- Similar to production software, machine learning requires infrastructure to ensure reliable and robust service offerings.
- Unlike traditional software, however, machine learning introduces complexities beyond the code, such as the versioning and orchestration of models.
- This criterion requires suppliers to be conscious of this, and to ensure their infrastructure is able to cope with these challenges.

Requirements

- Suppliers must be able to demonstrate their capabilities to version, roll back, diagnose and/or deploy models to production.
- Suppliers must have the processes and/or infrastructure to separate the development of new models (i.e. new capabilities) from the serving of models in production.
- Suppliers must demonstrate the ability to scale their services as required by the use-case.
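The requirements to version, deploy and roll back models can be made concrete with a minimal, in-memory model registry. This is a hypothetical sketch rather than any particular product's API: each serialised model is identified by a truncated SHA-256 hash of its bytes, so the same artefact always maps to the same version, and the registry keeps a history of deployed versions so that a deployment can be reverted.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: content-addressed model versions plus deployment history."""

    artefacts: Dict[str, bytes] = field(default_factory=dict)
    metadata: Dict[str, dict] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)  # deployed versions, oldest first

    def register(self, artefact: bytes, **meta) -> str:
        """Store a serialised model and return its version id (a hash of its bytes)."""
        version = hashlib.sha256(artefact).hexdigest()[:12]
        self.artefacts[version] = artefact
        # Keep the first metadata recorded for a version, e.g. data snapshot and code commit.
        self.metadata.setdefault(version, meta)
        return version

    def deploy(self, version: str) -> None:
        if version not in self.artefacts:
            raise KeyError(f"unknown model version {version}")
        self.history.append(version)

    def rollback(self) -> str:
        """Revert to the previously deployed version and return its id."""
        if len(self.history) < 2:
            raise RuntimeError("no previous version to roll back to")
        self.history.pop()
        return self.history[-1]

    @property
    def serving(self) -> Optional[str]:
        return self.history[-1] if self.history else None


if __name__ == "__main__":
    registry = ModelRegistry()
    v1 = registry.register(b"weights-v1", commit="hypothetical-commit-1")
    v2 = registry.register(b"weights-v2", commit="hypothetical-commit-2")
    registry.deploy(v1)
    registry.deploy(v2)
    assert registry.serving == v2
    registry.rollback()
    assert registry.serving == v1
```

Keeping `register` (development output) separate from `deploy` (what is served) mirrors the separation between developing and serving models asked for above; a production system would persist both in durable storage.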

| # | Question | Red flags |
|---|---|---|
| 4.1 | Does the supplier have a process and/or infrastructure to version models? | |
| 4.2 | Does the supplier have a process and/or infrastructure to re-train previous versions of models? | |
| 4.3 | Does the supplier have a clear separation for their workflows around development and production of models? | |
| 4.4 | Does the supplier have a QA/BETA process within their machine learning lifecycle? | |
| 4.5 | Does the supplier have a reasonable process to deploy and roll back models in production? | |
| 4.6 | Does the supplier have the infrastructure to reproduce and diagnose errors observed in production efficiently? | |
| 4.7 | Does the supplier have the capabilities to scale their computation horizontally? | |
| 4.8 | Does the supplier have the capabilities to scale their computation vertically? | |
| 4.9 | Does the supplier have the capabilities to provision the relevant resources required for the computation of the models across their infrastructure? | |
| 4.10 | Does the supplier have a stable release cycle and method to provide updates to their machine learning infrastructure? | |
| 4.11 | Does the supplier provide the required functionality to extend the features provided? | |
| 4.12 | Does the supplier provide comprehensible documentation on the infrastructure around their machine learning? | |
| 4.13 | Does the supplier demonstrate the team they have allocated for the design, development and delivery of the machine learning infrastructure has the right expertise? | |

5 - Privacy enforcing infrastructure

This Machine Learning Maturity Model assessment criterion is directly aligned with the Responsible Machine Learning Principle #7 - Trust beyond the user.

Explanation

- Both suppliers and companies have a responsibility to protect users’ privacy when their data is collected and stored in the solution.
- Unlike the B2C world, where there might be a clear user, B2B solutions may have a large number of different user types that interact with the solution (directly and indirectly), increasing the complexity of data protection.
- Whilst section “8 - Security risk processes” focuses on addressing data security risks from “external” threats, this section focuses on mitigating privacy violations that can arise from “internal” parties (whether intentional or unintentional).

Requirements

- Suppliers must demonstrate awareness of, and capability to protect, the privacy of users of the platform across multiple levels.
- The right communication channels should be established to ensure that all of the supplier’s stakeholders are capable of separating and protecting personally identifiable information where reasonable.
- When anonymization techniques are used, suppliers must demonstrate the use of reasonable techniques that can mitigate re-identification attacks.
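One way to reason about re-identification risk in anonymised data is k-anonymity: every combination of quasi-identifiers (attributes such as age band or postcode prefix that could be linked with outside data) should be shared by at least k records. The sketch below computes the k of a dataset and lists the combinations that fall short; the field names and records are hypothetical. k-anonymity on its own does not prevent every attack (for example, attribute disclosure when everyone in a group shares the same sensitive value), so this is an illustration rather than a sufficient control.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Record = Dict[str, str]


def k_anonymity(records: Sequence[Record], quasi_identifiers: Sequence[str]) -> int:
    """Smallest number of records sharing one combination of quasi-identifier values."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


def groups_below_k(records: Sequence[Record], quasi_identifiers: Sequence[str], k: int) -> List[Tuple[str, ...]]:
    """Quasi-identifier combinations shared by fewer than k records (re-identification risks)."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return [combo for combo, count in groups.items() if count < k]


if __name__ == "__main__":
    # Hypothetical, already generalised records.
    records = [
        {"age_band": "30-39", "postcode_prefix": "SW1", "diagnosis": "A"},
        {"age_band": "30-39", "postcode_prefix": "SW1", "diagnosis": "B"},
        {"age_band": "40-49", "postcode_prefix": "N1", "diagnosis": "A"},
        {"age_band": "40-49", "postcode_prefix": "N1", "diagnosis": "C"},
        {"age_band": "50-59", "postcode_prefix": "E2", "diagnosis": "B"},
    ]
    qi = ["age_band", "postcode_prefix"]
    print(k_anonymity(records, qi))  # 1: the 50-59 / E2 record is unique
    print(groups_below_k(records, qi, k=2))  # [('50-59', 'E2')]
```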

| # | Question | Red flags |
|---|---|---|
| 5.1 | Does the supplier have the process and/or infrastructure to restrict access to user data? | |
| 5.2 | Does the supplier have processes and/or infrastructure in place to ensure data is anonymised where reasonable? | |
| 5.3 | Does the supplier have capabilities to ensure privacy protection on the data that is used in models? | |
| 5.4 | Does the supplier have the internal capabilities to ensure compliance with the relevant regulations? | |
| 5.5 | Does the supplier have the capability to ensure privacy protection on the metadata contained within their models? | |
| 5.6 | Does the supplier have processes in place to ensure user privacy measures are applied according to the consent that has been given? | |
| 5.7 | Does the supplier provide comprehensible documentation on the infrastructure around their machine learning? | |
| 5.8 | Does the supplier demonstrate the team they have allocated has the right expertise to ensure the required level of privacy is provided to the users in the system? | |

6 - Operational process design

This Machine Learning Maturity Model assessment criterion is directly aligned with the Responsible Machine Learning Principle #1 - Human augmentation.

Explanation

- This criterion focuses on the processes required for the responsible operation of the solution. It assesses whether the proposed process includes fail-safe steps to mitigate the impact of errors / incorrect predictions.
- The operational process involves the full end-to-end steps that are performed around the machine learning system, including human intervention or analysis at any relevant step of the process.

Requirements

- This criterion depends heavily on Criterion 3 - Data and model assessment processes, as suppliers need a plan in place for the operational/business/digital transformation to take place.
- The main objective of this criterion is to ensure suppliers show explicitly that they have considered the impact of incorrect predictions or errors, and that they can mitigate some of these through operational steps introduced beyond the technology.
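Questions 6.1 to 6.3 ask how suppliers decide whether a human-in-the-loop step is needed based on the impact of incorrect predictions. One simple way to make that decision explicit (an illustrative assumption, not a method mandated by this framework) is to send a prediction to human review whenever its expected cost of being wrong exceeds the cost of a review. The cost figures below are hypothetical, and the rule assumes the model's confidence scores are reasonably well calibrated.

```python
from dataclasses import dataclass


@dataclass
class RoutingPolicy:
    """Hypothetical cost-based rule for sending predictions to human review."""

    error_cost: float   # assumed cost of acting on an incorrect prediction
    review_cost: float  # assumed cost of one human review

    def route(self, confidence: float) -> str:
        """Return 'auto' or 'human_review' given the model's confidence in its prediction."""
        expected_error_cost = (1.0 - confidence) * self.error_cost
        return "human_review" if expected_error_cost > self.review_cost else "auto"

    @property
    def confidence_threshold(self) -> float:
        """Confidence at or above which automation is no more costly than review."""
        return max(0.0, 1.0 - self.review_cost / self.error_cost)


if __name__ == "__main__":
    policy = RoutingPolicy(error_cost=500.0, review_cost=10.0)
    print(policy.confidence_threshold)
    for confidence in (0.99, 0.9):
        print(confidence, policy.route(confidence))
```

Raising `error_cost` for a more critical use-case pushes the threshold towards 1, so more predictions go to a human reviewer.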

| # | Question | Red flags |
|---|---|---|
| 6.1 | Does the supplier have a process to assess the need for a human-in-the-loop design process based on the impact of incorrect predictions? | |
| 6.2 | Does the supplier have a process to assess whether a human-in-the-loop review process is or isn’t necessary? | |
| 6.3 | Does the supplier have a process to assess whether a temporary human-in-the-loop process is necessary? | |
| 6.4 | Does the supplier have a process to assess whether a scheduled human review is or isn’t necessary after the deployment of a model? | |
| 6.5 | Is the supplier able to ensure the right domain experts are involved in the human-in-the-loop review process? | |
| 6.6 | Does the supplier demonstrate the team they have allocated has the right expertise to ensure safety, quality and performance in the design of the operational process? | |

7 - Change management capabilities

This Machine Learning Maturity Model assessment criterion is directly aligned with the Responsible Machine Learning Principle #5 - Displacement strategy.

Explanation

- When delivering any large-scale IT project, it is important to have the right change management plans in place, and AI & machine learning systems are no different.
- Large-scale machine learning systems are often introduced to create a new data analysis capability and/or automate an existing process. As a result, people will need to be re-trained to use the systems in the right way, and the efficiency gains need to be distributed by reallocating the time that becomes available.

Requirements

- This criterion depends heavily on Criterion 6 - Operational process design, as suppliers need a plan in place for the operational/business/digital transformation to take place.
- For larger system rollouts, suppliers must be able to demonstrate the right processes and infrastructure to deal with the impact of their proposed automation on the stakeholders whose work is being partially or fully automated.
- Suppliers must also be able to show they have the processes and infrastructure to perform training and handover for the relevant stakeholders that will be operating the solution.

| # | Question | Red flags |
|---|---|---|
| 7.1 | Does the supplier provide a comprehensible plan to roll out the solution internally? | |
| 7.2 | Does the supplier have the capability to carry out the business change plan proposed? | |
| 7.3 | Does the supplier have the capability to provide training for stakeholders that will operate the solution? | |
| 7.4 | Does the supplier provide a comprehensible plan for handover? | |
| 7.5 | Does the supplier have a process and/or capability to support the transition of stakeholders whose work is automated? | |
| 7.6 | Does the supplier provide comprehensible documentation and material on change management plans and training? | |
| 7.7 | Does the supplier demonstrate the team they have allocated has the right expertise to perform the change management delivery, training and handover? | |

8 - Security risk processes

This Machine Learning Maturity Model assessment criterion is directly aligned with the Responsible Machine Learning Principle #8 - Data risk awareness.

Explanation

- This section focuses on the “external” threats and risks around the data contained in the systems proposed in the solution.
- This section requires suppliers not only to have the right technical safeguards, but also to provide the right education for relevant users that will interact with potentially complex systems.
- This section should complement the standard security questions that are often provided through questionnaires in tender processes.

Requirements

- Suppliers must demonstrate awareness of data and system security, including for their machine learning models and the infrastructure around them.
- Suppliers should provide an overview of how their systems and processes are secured.
- Suppliers should demonstrate they have the right processes in place around the stakeholders that operate the solution.
- Suppliers should demonstrate they have the right processes in place to educate the stakeholders that operate the solution.

| # | Question | Red flags |
|---|---|---|
| 8.1 | Does the supplier have processes to ensure only privileged users have access? | |
| 8.2 | Does the supplier ensure all machine learning model data is encrypted in transit? | |
| 8.3 | Does the supplier ensure all machine learning model data is encrypted at rest? | |
| 8.4 | Does the supplier have processes and/or infrastructure in place to ensure that all the encryption keys and relevant passwords are not shared or repeated across deployments? | |
| 8.5 | Does the supplier have processes and/or infrastructure to assess the level of protection required based on exposure? | |
| 8.6 | Does the supplier have a process to ensure confidential information is not exposed through logs or other metrics channels? | |
| 8.7 | Does the supplier have processes and/or infrastructure to ensure system access is restricted to privileged users as required? | |
| 8.8 | Does the supplier demonstrate the team they have allocated has the right expertise to fortify their machine learning infrastructure against external threats? | |
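Question 8.6 concerns confidential information leaking through logs. With Python's standard `logging` module, one way to enforce this is a `logging.Filter` attached to a handler, which redacts sensitive patterns before the handler emits a record. The sketch below redacts email addresses only; the regular expression is a simplified assumption, and the logger name `ml_service` is hypothetical. A real deployment would need patterns for whatever identifiers the use-case handles.

```python
import logging
import re

# Simplified email pattern; an assumption, not a complete RFC 5322 matcher.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class RedactEmailsFilter(logging.Filter):
    """Replace email addresses in the fully formatted log message."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = EMAIL_PATTERN.sub("[REDACTED]", record.getMessage())
        record.args = ()  # arguments are already merged into msg
        return True  # keep the record, just redacted


def build_logger(name: str = "ml_service") -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    # Attached to the handler, so every record this handler emits is redacted,
    # including records propagated from child loggers.
    handler.addFilter(RedactEmailsFilter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    log = build_logger()
    log.info("prediction served for %s", "jane.doe@example.com")
    # stderr: prediction served for [REDACTED]
```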